Hi Friends ,

Sometime back, there was a request on the MSDN forums where the OP wanted to archive the replicated partitioned tables in the publisher database . I think it would be good to share the solution with everyone in this forum as well .I have used SQL Server 2008 Evaluation Edition SP1 , Build 10.0.2531 for my testing .

In this post we will see :

Part 1) archiving the replicated non-partitioned (normal) tables .

Part 2) archiving the replicated partitioned tables .

At the end ,you will notice one nice to know feature of partitioned tables .

Part 1) Archiving the replicated non-partitioned (normal) tables .

Publisher : DB2Migration

Subscriber : DB2Migration_Sub

Replication Topology : Transactional Replication

Articles : dbo.Test

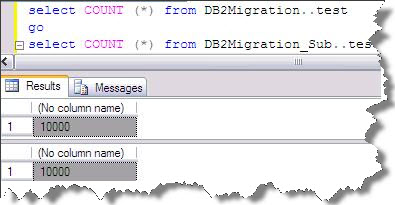

Other details : Both tables have 10000 rows each after first synchronization.

Now we need to archive the test table in publisher database but want to keep the Subscriber untouched i.e. the rows in subscriber should not change .For this example we will delete all the rows of the publisher table .

How should we do it ?

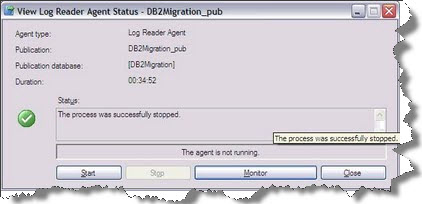

If I delete any row on publisher , the same will be replicated to subscriber . One way might be to stop the log reader agent and then delete the rows .After this , I can fire sp_repldone on the publisher and start the logreader agent .yes this is perfectly achievable . Here we go …

DBCC opentran will show:

No active open transactions.

DBCC execution completed. If DBCC printed error messages, contact your system administrator.

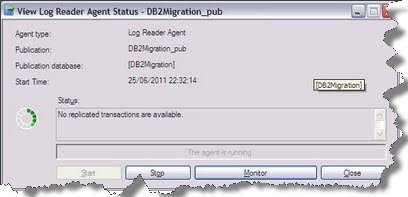

We will enable the LogReader agent now and will see that there are no transactions to be replicated.

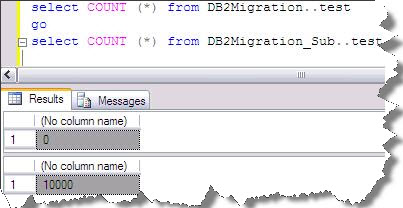

Select count * from both the tables will show 0( zero ) and 10000 rows respectively .

After this we will insert 1000 rows in publisher table ( we need to be careful as the tables have primary key).As a result the subscriber now, has 11000 rows and publisher has 1000 rows .This is going to be costly when there will be millions of rows because delete (or update or insert )is a logged activity.There is one more drawback and perhaps more critical.The log reader agent is one per database .So if there are more than one publications on the same database and we run sp_repldone , then we will hurt other subscriptions and publications .So we have to be careful. Other way is to truncate the table (after moving the data to an archived table) but replicated tables cannot be truncated (why ???…..simple , Truncating a table is logged the deallocation of the pages but the individual records are not logged as deletes. Metadata consistency is not maintained between publisher and subscriber, so there is no way for replication to know whether the allocated pages all contained the same data).So to truncate the table ,you need to remove the article from the publication .If you want to do that the steps are :

*******TEST THIS BEFORE IMPLEMENTING IT IN PRODUCTION********

1) Stop the log reader agent and distribution agent

2) Drop the article(s) from the publication

3) Archive the table to another table ( this will be a logged activity ) by Bulk insert or BCP or import export wizard

4) Truncate the table 5) Add the article again

6) Change the properties of all the articles in the publication properties to “Keep existing object unchanged” for option action “if name is in use ” .This is the most important step and please cross check it a few times to make sure that “Keep existing object unchanged ” is set

6) generate the snapshot again ….

7) start the log reader agent and distribution agent and initiate the new snapshot ….

*******TEST THIS BEFORE IMPLEMENTING IT IN PRODUCTION********

Trust me .you are done 🙂 ..But don’t you think its lengthy and a bit risky ..Now lets see something new …

Part 2) Archiving the replicated partitioned tables .

Let us first create 2 new databases followed by creating partition functions followed by partition schemes followed by partitioned tables followed by inserting data in the tables .

--creating database and filegroups create database test GO ALTER DATABASE test ADD FILEGROUP [second] GO ALTER DATABASE test ADD FILEGROUP [third] GO ALTER DATABASE test ADD FILEGROUP [forth] GO ALTER DATABASE test ADD FILEGROUP [fifth] GO --Adding new files to the filegroups USE [master] GO ALTER DATABASE test ADD FILE ( NAME = N'test2', FILENAME = N'C:\Program Files\Microsoft SQL Server\test2.ndf' , SIZE = 2048KB , FILEGROWTH = 1024KB ) TO FILEGROUP [second] GO ALTER DATABASE test ADD FILE ( NAME = N'test3', FILENAME = N'C:\Program Files\Microsoft SQL Server\test3.ndf' , SIZE = 2048KB , FILEGROWTH = 1024KB ) TO FILEGROUP [third] GO ALTER DATABASE test ADD FILE ( NAME = N'test4', FILENAME = N'C:\Program Files\Microsoft SQL Server\test4.ndf' , SIZE = 2048KB , FILEGROWTH = 1024KB ) TO FILEGROUP [forth] GO ALTER DATABASE test ADD FILE ( NAME = N'test5', FILENAME = N'C:\Program Files\Microsoft SQL Server\test5.ndf' , SIZE = 2048KB , FILEGROWTH = 1024KB ) TO FILEGROUP [fifth] GO --The following partition function will partition a table or index into four partitions. USE test GO CREATE PARTITION FUNCTION [PF_test](int) AS RANGE LEFT FOR VALUES (1,100,1000) -- Creating partition scheme use test GO IF NOT EXISTS (SELECT * FROM sys.partition_schemes WHERE name = N'PS_test') create PARTITION SCHEME [PS_test] AS PARTITION [PF_test] TO ([second],[third],[forth],[fifth]) view source print --[Note if you want to have one filegroup for all the files then : create PARTITION SCHEME [PS_test] AS PARTITION [PF_test] All TO ([secondary]) ] --creating table with constraint and assigning a partition scheme to it create table test (dummy [int] primary key constraint test_c check ([dummy] > 0 and [dummy] <=20000)) on ps_test (dummy) --inserting values declare @val int set @val=1000 while (@val > 0) begin insert into test..test values (@val) set @val=@val-1 end --On Subscriber we will only create the same filegroups and add files to them : --creating database and filegroups create database test_sub GO ALTER DATABASE test_sub ADD FILEGROUP [second] GO ALTER DATABASE test_sub ADD FILEGROUP [third] GO ALTER DATABASE test_sub ADD FILEGROUP [forth] GO ALTER DATABASE test_sub ADD FILEGROUP [fifth] GO --Adding new files to the filegroups USE [master] GO ALTER DATABASE test_sub ADD FILE ( NAME = N'test_sub2', FILENAME = N'C:\Program Files\Microsoft SQL Server\test_sub_sub2.ndf' , SIZE = 2048KB , FILEGROWTH = 1024KB ) TO FILEGROUP [second] GO ALTER DATABASE test_sub ADD FILE ( NAME = N'test_sub3', FILENAME = N'C:\Program Files\Microsoft SQL Server\test_sub_sub3.ndf' , SIZE = 2048KB , FILEGROWTH = 1024KB ) TO FILEGROUP [third] GO ALTER DATABASE test_sub ADD FILE ( NAME = N'test_sub4', FILENAME = N'C:\Program Files\Microsoft SQL Server\test_sub_sub4.ndf' , SIZE = 2048KB , FILEGROWTH = 1024KB ) TO FILEGROUP [forth] GO ALTER DATABASE test_sub ADD FILE ( NAME = N'test_sub5', FILENAME = N'C:\Program Files\Microsoft SQL Server\test_sub_sub5.ndf' , SIZE = 2048KB , FILEGROWTH = 1024KB ) TO FILEGROUP [fifth] GO

After this, create the publication on database TEST and add the article TEST. Once It’s done, the Test_Pub Publication is ready to publish. After this we will create the Subscription on this publication .Our subscriber database is test_sub.Once the initial snapshot is synchronized you will see the following values:

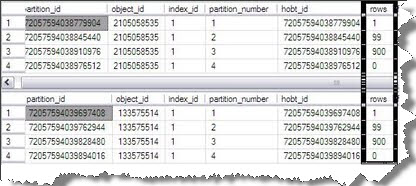

select OBJECT_ID('test..test')

select OBJECT_ID('test_sub..test')

select * from test.sys.partitions where object_id in (2105058535) order by partition_number

select * from test_sub.sys.partitions where object_id in (133575514) order by partition_number

So as of now everything is as per plan . The data is synchronized in the correct partitions .Now if we need to archive the publisher table we can try the same old approach that we used in stage 1 .However , we will try something new here .That something new is SWITCHING OF PARTITIONS in the table .I will not explain what does it means because you will see it in few seconds(or you can refer BOL) .Lets first create the archive table on publisher .It’s the replica of the original test table

create table test..test_archive (dummy [int] primary key constraint test_c_a check ([dummy] > 0 and [dummy] <=20000)) on ps_test (dummy) --Switching the partitions from test to test_archive table on publication ALTER TABLE test..test SWITCH PARTITION 1 TO test_archive Partition 1; GO

Msg 21867, Level 16, State 1, Procedure sp_MStran_altertable, Line 259

ALTER TABLE SWITCH statement failed. The table ‘[dbo].[test]’ belongs to a publication which does not allow switching of partitions

Oopsss , What happened…Yes , that is true , we cannot switch the partitions for the replicated table , unless ……….we explicitly allow partition switching for the publisher :

sp_changepublication 'test_pub' ,@property='allow_partition_switch',@value='true'

You will get this message

The publication has updated successfully.

Switching the partitions from test to test_archive table on publication. This is for partition number 1 . We have 4 partitions.

ALTER TABLE test..test SWITCH PARTITION 1 TO test_archive Partition 1; GO ALTER TABLE test..test SWITCH PARTITION 2 TO test_archive Partition 2; GO ALTER TABLE test..test SWITCH PARTITION 3 TO test_archive Partition 3; GO ALTER TABLE test..test SWITCH PARTITION 4 TO test_archive Partition 4; GO

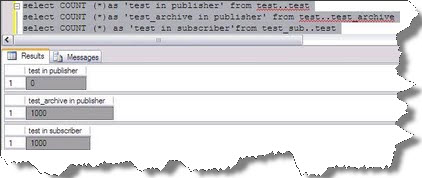

Now , just check the number of rows in the tables test and test_archive on publisher test and the test table on subscriber test_sub:

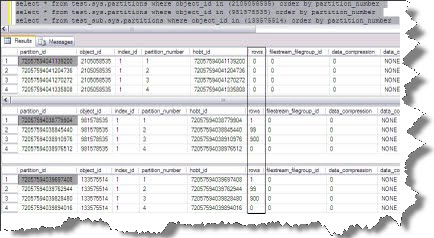

Thats the magic :)..Did you also notice that we did not create any partitions for the test_archive table .Lets query the syspartition DMV and notice the partitions in test_archive table.

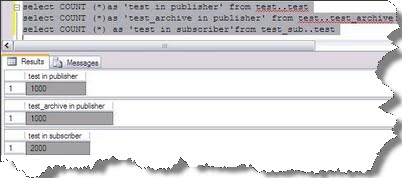

Thats the beauty .You did not have to delete or truncate a single row.Nor did you remove the article or stopped any agent . Now if you add rows to table TEST it will be replicated to subscriber as usual .lets try this by inserting 1000 rows in table test in the publisher and then check the subscriber table.

inserting new values in test table ( in publisher database )

declare @val int set @val=2000 while (@val > 1000) begin insert into test..test values (@val) set @val=@val-1 end

Hope you have found this post helpful .

Regards

Abhay Chaudhary

Like us on FaceBook | Follow us on Twitter

Follow me on FaceBook | Join the fastest growing SQL Server group on FaceBook

Very nice & informative post Abhay, A great start.

Welcome to SSG.

Thanks Sarab. 🙂

Nice post Abhay !

want to write blog in your community but i cant find where to start and where i have to create .please help me Thanks&Regards Siva G

Hi Siva,

its really good that you want to write for SQLServerGeeks.com, but blog section is not enabled for every member by default.

In case you want to be one of the author with SSG, please get in touch with Admin by writing into AdminSQLServerGeeks.com.

Thanks.

Sarab

Thanks Amit ..

One correction. Truncating a table is logged. It logs the deallocation of the pages. I believe what you were intending to say is that the individual records are not logged as deletes. Metadata consistency is not maintained between publisher and subscriber, so there is no way for replication to know whether the allocated pages all contained the same data. Therefore, it can’t replicate this type of operation.

Absolutely Robert .Its is in deed .Inface it can be rollled back as well. 🙂

I was basically trying to convey the same message that you conveyed ..But I have corrected it now anyway ..